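Data Collection Tool: DataX

0. Background

To compare the features of Kettle and DataX, I first deployed DataX as a technical proof of concept (POC).

Required software

(5) Test data: the "China 5-level administrative divisions MySQL database" repository, containing 758,049 rows.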

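1. Prepare the databases

(1) Prepare the MySQL database

① Prepare the system database

In MySQL, create a database named datax_web with the utf8mb4 character set.

Then import the data in datax_web.sql into that database.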
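If you prefer the command line to a GUI client, you can script the two steps above. The sketch below only builds the SQL statement and the shell command as strings; it does not run them. The host 192.168.1.100 and the user root come from the admin configuration later in this post, and `create_database_sql` / `import_command` are hypothetical helper names.

```python
import shlex


def create_database_sql(name: str, charset: str = "utf8mb4") -> str:
    """SQL that creates the DataX Web system database with the given charset."""
    return f"CREATE DATABASE IF NOT EXISTS `{name}` DEFAULT CHARACTER SET {charset};"


def import_command(host: str, user: str, database: str, dump_file: str) -> str:
    """Shell command that feeds a .sql dump into `database` with the mysql client.

    A bare -p makes the client prompt for the password instead of
    putting it on the command line.
    """
    args = ["mysql", "-h", host, "-u", user, "-p",
            "--default-character-set=utf8mb4", database]
    return " ".join(shlex.quote(a) for a in args) + " < " + shlex.quote(dump_file)


if __name__ == "__main__":
    print(create_database_sql("datax_web"))
    print(import_command("192.168.1.100", "root", "datax_web", "datax_web.sql"))
```

Run the printed SQL in a mysql session first, then run the printed command in a shell. The same `import_command` also works for cnarea20200630.sql, but only after max_allowed_packet has been raised as described below.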

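② Prepare the source database for data collection

Extract the cnarea20200630.7z file from the cloned "China 5-level administrative divisions MySQL database" repository.

Import the extracted cnarea20200630.sql script into a MySQL database named cnarea20200630.

The import fails with: [ERR] 2006 - MySQL server has gone away

Solution: raise max_allowed_packet:

```sql
set global max_allowed_packet=1024*1024*128;
```

Check the current value with:

```sql
show global variables like 'max_allowed_packet';
```

My MySQL instance had max_allowed_packet set to 4194304, i.e. 1024*1024*4.

After several more failures I raised the value step by step. The import finally succeeded at 1024*1024*128, i.e. 134217728. `SET GLOBAL` only affects connections opened after the change, so reconnect the import client once you have changed it.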
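You can also estimate the value from the dump instead of raising it by trial and error. Here is a minimal sketch. It assumes each SQL statement in the dump sits on a single line, which is typical for extended INSERTs; multi-line statements would be undercounted. It measures the longest line and doubles the 4 MiB starting value until that line fits. The function names are hypothetical. Treat the result as a lower bound, because the server also needs some headroom beyond the raw statement length.

```python
from pathlib import Path

MIB = 1024 * 1024
MAX_PACKET_LIMIT = 1024 * MIB  # MySQL caps max_allowed_packet at 1 GB


def longest_statement_bytes(dump: Path) -> int:
    """Length in bytes of the longest line in a SQL dump.

    Assumes one statement per line; multi-line statements are undercounted.
    """
    longest = 0
    with dump.open("rb") as f:
        for line in f:
            longest = max(longest, len(line))
    return longest


def suggested_packet_size(dump: Path, start: int = 4 * MIB) -> int:
    """Double `start` (4 MiB, the original setting) until it covers the
    longest statement, like raising the value step by step by hand."""
    needed = longest_statement_bytes(dump)
    size = start
    while size < needed:
        size *= 2
    if size > MAX_PACKET_LIMIT:
        raise ValueError(f"a statement of {needed} bytes exceeds the 1 GB limit")
    return size
```

For example, `suggested_packet_size(Path("cnarea20200630.sql"))` returns a byte count you can plug into the `set global max_allowed_packet=...` statement above.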

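(2) Prepare the PostgreSQL database

The PostgreSQL database is the collection target: data from the MySQL database cnarea20200630 is collected into it.

Create a database named test in PostgreSQL for this purpose.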

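2. Using DataX Web

(1) Log in

Open http://127.0.0.1:8080/index.html to log in to the DataX Web UI.

The default account is admin and the default password is 123456.

After logging in you will see the "Run Report" page.

(2) Add data sources

Click the "Data Source Management" menu to open the data source management page.

Click "Add" to add a MySQL data source.

Enter the data source's connection settings and click "Test Connection" to check that the data source is reachable.

The pop-up message "Success Tested Successfully" shows that the data source is reachable; click "Confirm".

In the same way, add a data source for the PostgreSQL database test.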

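(3) DataX task templates

In the "Task Management" menu, click "DataX Task Template", then click "Add" to create a task template.

(4) Build a task

In the "Task Management" menu, click "Task Build" to build a task.

Step 1, build the reader: set the data source and the table name.

Step 2, build the writer: set the data source, the schema, and the table name.

Step 3, field mapping: set the data source and the table name.

Step 4, build: set the source fields and the target fields.

After you click "Next", three buttons appear: "1. Build", "2. Select template", and "Copy JSON".

Clicking "1. Build" generates a job JSON: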

```json
{
  "job": {
    "setting": {
      "speed": {
        "channel": 3,
        "byte": 1048576
      },
      "errorLimit": {
        "record": 0,
        "percentage": 0.02
      }
    },
    "content": [
      {
        "reader": {
          "name": "postgresqlreader",
          "parameter": {
            "username": "XVko54UY9nOe/3JQGQUikw==",
            "password": "XCYVpFosvZBBWobFzmLWvA==",
            "column": [
              "\"id\"",
              "\"level\"",
              "\"parent_code\"",
              "\"area_code\"",
              "\"zip_code\"",
              "\"city_code\"",
              "\"name\"",
              "\"short_name\"",
              "\"merger_name\"",
              "\"pinyin\"",
              "\"lng\"",
              "\"lat\""
            ],
            "splitPk": "",
            "connection": [
              {
                "table": [
                  "cnarea_2020"
                ],
                "jdbcUrl": [
                  "jdbc:postgresql://192.168.1.100:5432/test"
                ]
              }
            ]
          }
        },
        "writer": {
          "name": "mysqlwriter",
          "parameter": {
            "username": "yRjwDFuoPKlqya9h9H2Amg==",
            "password": "XCYVpFosvZBBWobFzmLWvA==",
            "column": [
              "`id`",
              "`level`",
              "`parent_code`",
              "`area_code`",
              "`zip_code`",
              "`city_code`",
              "`name`",
              "`short_name`",
              "`merger_name`",
              "`pinyin`",
              "`lng`",
              "`lat`"
            ],
            "connection": [
              {
                "table": [
                  "cnarea_2020"
                ],
                "jdbcUrl": "jdbc:mysql://192.168.1.100:3306/cnarea20200630"
              }
            ]
          }
        }
      }
    ]
  }
}
```

In this generated job the reader is postgresqlreader and the writer is mysqlwriter. That is the reverse of the MySQL-to-PostgreSQL direction planned in section 1. The job in the later run log uses mysqlreader and postgresqlwriter.

Click "2. Select template" to choose a task template.

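After you choose one, the second button shows that template's "Task ID (task description)" from "DataX Task Template".

Here it shows "22 (every 10 minutes at 10 PM)". Then click "Next" at the bottom of the page, and the task is created.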

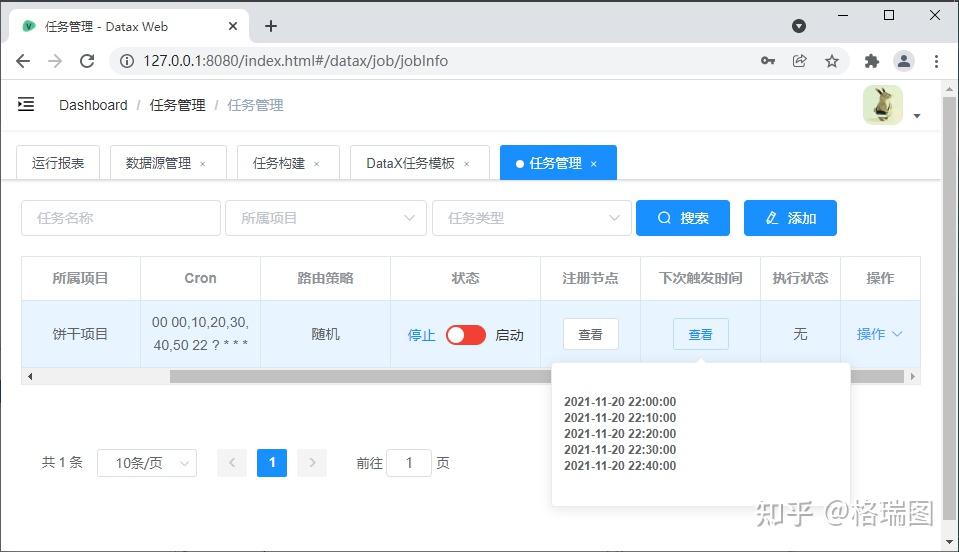
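The generated JSON follows a regular pattern. MySQL columns are quoted with backticks and PostgreSQL columns with double quotes. The reader's `jdbcUrl` is an array, while the writer's is a plain string. Each plugin name is the database name followed by `reader` or `writer`. The sketch below assembles a job for the intended MySQL-to-PostgreSQL direction under those observations. The `build_job` helper and its dict keys (`dialect`, `jdbc_url`, `split_pk`, ...) are hypothetical and are not part of DataX or DataX Web.

```python
import json
from typing import Dict, Sequence

# Column list of cnarea_2020, taken from the generated job above.
COLUMNS = ["id", "level", "parent_code", "area_code", "zip_code", "city_code",
           "name", "short_name", "merger_name", "pinyin", "lng", "lat"]


def quote(column: str, dialect: str) -> str:
    """Quote an identifier the way the generated job does."""
    if dialect == "mysql":
        return f"`{column}`"
    if dialect == "postgresql":
        return f'"{column}"'
    raise ValueError(f"unsupported dialect: {dialect}")


def _side(cfg: Dict[str, str], role: str, columns: Sequence[str]) -> dict:
    url = cfg["jdbc_url"]
    params = {
        "username": cfg["username"],
        "password": cfg["password"],
        "column": [quote(c, cfg["dialect"]) for c in columns],
        # Reader: jdbcUrl is a list; writer: a single string (as in the job above).
        "connection": [{"table": [cfg["table"]],
                        "jdbcUrl": [url] if role == "reader" else url}],
    }
    if role == "reader":
        params["splitPk"] = cfg.get("split_pk", "")
    return {"name": cfg["dialect"] + role, "parameter": params}


def build_job(reader: Dict[str, str], writer: Dict[str, str],
              columns: Sequence[str] = COLUMNS,
              channel: int = 3, byte_limit: int = 1048576) -> dict:
    """Assemble a single reader/writer DataX job shaped like the one above."""
    return {
        "job": {
            "setting": {
                "speed": {"channel": channel, "byte": byte_limit},
                "errorLimit": {"record": 0, "percentage": 0.02},
            },
            "content": [{"reader": _side(reader, "reader", columns),
                         "writer": _side(writer, "writer", columns)}],
        }
    }


if __name__ == "__main__":
    job = build_job(
        reader={"dialect": "mysql", "username": "root", "password": "******",
                "jdbc_url": "jdbc:mysql://192.168.1.100:3306/cnarea20200630",
                "table": "cnarea_2020", "split_pk": "id"},
        writer={"dialect": "postgresql", "username": "postgres", "password": "******",
                "jdbc_url": "jdbc:postgresql://192.168.1.100:5432/test",
                "table": "public.cnarea_2020"},
    )
    print(json.dumps(job, indent=2))
```

The sketch passes credentials through as given. DataX Web stores the AES-encrypted strings shown above, whereas plain DataX, run as `python datax.py job.json`, accepts plaintext values.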

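(5) Task management

Under "Task Management", click the "Task Management" submenu. The task "cnarea_2020" created in the previous step is listed there.

Check its "Registered nodes".

Check its "Next trigger time".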
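To sanity-check the "Next trigger time" column, here is a small sketch that computes the next run for template 22. It assumes the schedule means "every 10 minutes during the 22:00 hour", i.e. 22:00, 22:10, ..., 22:50 each day; the template's actual cron expression is not shown in this post. `next_fire_time` is a hypothetical helper, not a DataX Web API.

```python
from datetime import datetime, timedelta


def next_fire_time(now: datetime, hour: int = 22, step_minutes: int = 10) -> datetime:
    """Next trigger strictly after `now` for a hypothetical schedule that
    fires at hour:00, hour:10, ..., hour:50 every day."""
    midnight = now.replace(hour=0, minute=0, second=0, microsecond=0)
    for day in (0, 1):
        start = midnight + timedelta(days=day, hours=hour)
        for minute in range(0, 60, step_minutes):
            candidate = start + timedelta(minutes=minute)
            if candidate > now:
                return candidate
    # Unreachable: tomorrow's first slot is always later than `now`.
    raise RuntimeError("no trigger time found")
```

For the 2021-11-20 22:13:36 timestamp noted below, `next_fire_time(datetime(2021, 11, 20, 22, 13, 36))` gives 22:20:00 the same evening.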

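Wait a while and check whether data is being collected. That is all for the UI walkthrough.

Next, I'll explain how to deploy the DataX Web setup described above.

After writing for quite a while, I noticed the task had never run: its "Status" column showed that it was not started.

Click that button to start the task.

2021-11-20 22:13:36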

3. Deploy DataX

(1) Download

Download the DataX package (datax.tar.gz) and extract it.
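A minimal sketch of the extraction step, using Python's standard `tarfile` module. It assumes the archive unpacks into a top-level `datax/` folder, which matches the D:\code\datax\bin\datax.py path used later in the executor configuration. `extract_datax` is a hypothetical helper name.

```python
import tarfile
from pathlib import Path


def extract_datax(archive: Path, dest: Path) -> Path:
    """Extract datax.tar.gz into `dest` and return the path of bin/datax.py.

    Assumes the archive has a top-level datax/ directory.
    """
    dest.mkdir(parents=True, exist_ok=True)
    root = dest.resolve()
    with tarfile.open(archive, "r:gz") as tar:
        # Refuse entries that would land outside `dest` (absolute paths, "..").
        for member in tar.getmembers():
            target = (root / member.name).resolve()
            if target != root and root not in target.parents:
                raise ValueError(f"unsafe path in archive: {member.name}")
        if hasattr(tarfile, "data_filter"):
            tar.extractall(dest, filter="data")  # newer Pythons: safe extraction filter
        else:
            tar.extractall(dest)
    launcher = dest / "datax" / "bin" / "datax.py"
    if not launcher.is_file():
        raise FileNotFoundError(launcher)
    return launcher
```

The returned path is the value the executor's `pypath` setting should point to.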

4. Deploy Hadoop Common

(1) Download hadoop-2.7.3.tar.gz and extract it

(2) Configure the HADOOP_HOME environment variable

Create an environment variable named "HADOOP_HOME" whose value points to "D:\code\hadoop-2.7.3".

5. Deploy the winutils tool

(1) Clone the code

Clone the code from the "winutils GitHub repository":

```text
code@code MINGW64 /d/code
$ git clone https://github.com/cdarlint/winutils.git
Cloning into 'winutils'...
remote: Enumerating objects: 434, done.
remote: Counting objects: 100% (48/48), done.
remote: Compressing objects: 100% (37/37), done.
remote: Total 434 (delta 19), reused 35 (delta 8), pack-reused 386
Receiving objects: 100% (434/434), 5.84 MiB | 989.00 KiB/s, done.
Resolving deltas: 100% (312/312), done.
Updating files: 100% (448/448), done.
```

(2) Copy the executables

Copy the files in the cloned repository's "hadoop-2.7.3/bin" directory into the bin directory under "HADOOP_HOME".

Overwriting existing files is fine.
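You can also script the copy step. Here is a minimal sketch, assuming the repository was cloned to a local folder and HADOOP_HOME is already set as in section 4. `install_winutils` is a hypothetical helper name.

```python
import os
import shutil
from pathlib import Path
from typing import List, Optional


def install_winutils(repo: Path, version: str = "hadoop-2.7.3",
                     hadoop_home: Optional[str] = None) -> List[str]:
    """Copy every file in <repo>/<version>/bin into <HADOOP_HOME>/bin.

    Files with the same name are overwritten, matching the note above.
    Returns the names of the copied files.
    """
    home = hadoop_home or os.environ.get("HADOOP_HOME")
    if not home:
        raise RuntimeError("HADOOP_HOME is not set")
    src = repo / version / "bin"
    dst = Path(home) / "bin"
    dst.mkdir(parents=True, exist_ok=True)
    copied = []
    for item in sorted(src.iterdir()):
        if item.is_file():
            shutil.copy2(item, dst / item.name)  # replaces any existing file
            copied.append(item.name)
    return copied
```

For example, `install_winutils(Path(r"D:\code\winutils"))` copies into the bin folder of whatever HADOOP_HOME points to.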

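(3) Create the CLASSPATH environment variable

All of this Hadoop setup is mainly meant to fix the following error:

```text
18:51:15.569 admin [main] ERROR o.a.h.u.Shell - Failed to locate the winutils binary in the hadoop binary path
java.io.IOException: Could not locate executable null\bin\winutils.exe in the Hadoop binaries.
```

The "null" in the path means Hadoop could not determine its home directory, because HADOOP_HOME was not set.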

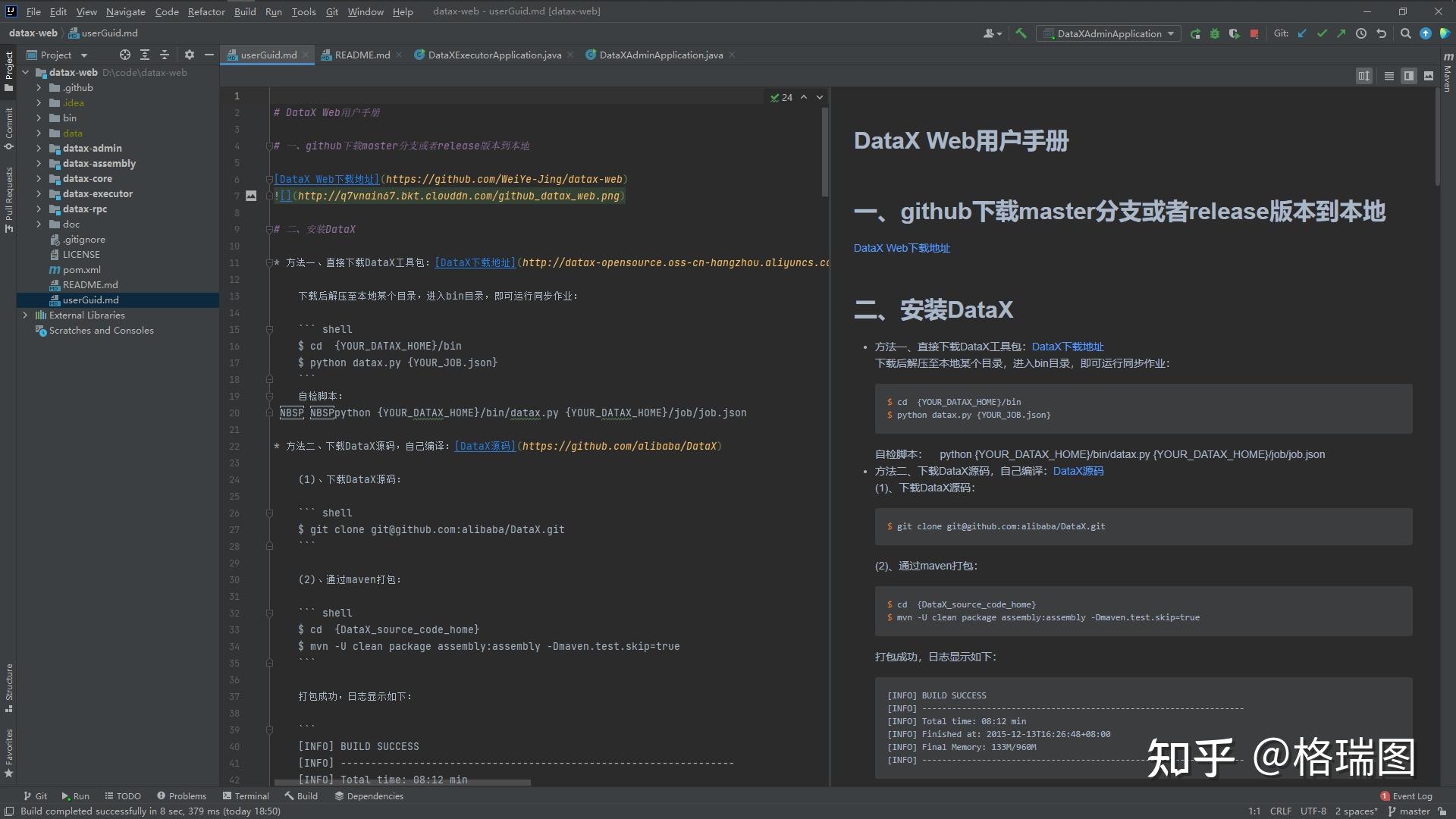
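Before starting datax-admin, you can check that the lookup behind this error will succeed. The sketch below only checks the HADOOP_HOME environment variable. Hadoop itself also honours the `hadoop.home.dir` JVM system property, which this sketch ignores. `find_winutils` is a hypothetical helper name.

```python
import os
from pathlib import Path
from typing import Mapping, Optional


def find_winutils(env: Optional[Mapping[str, str]] = None) -> Path:
    """Return <HADOOP_HOME>/bin/winutils.exe, or raise with a hint.

    Only the HADOOP_HOME variable is checked; hadoop.home.dir is ignored.
    """
    env = os.environ if env is None else env
    home = env.get("HADOOP_HOME")
    if not home:
        # This is the case that shows up as "null\bin\winutils.exe".
        raise FileNotFoundError("HADOOP_HOME is not set")
    exe = Path(home) / "bin" / "winutils.exe"
    if not exe.is_file():
        raise FileNotFoundError(f"{exe} not found; copy it from the winutils repository")
    return exe
```

On Windows, a newly created environment variable is only visible to processes started afterwards. Restart the IDE or terminal that launches datax-admin after setting it.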

6. Build and run DataX Web

(1) Clone the code

(2) Modify the datax-admin configuration

```yaml
server:
  port: 8080
  # port: ${server.port}
spring:
  # data source
  datasource:
    username: root
    password: 123456
    url: jdbc:mysql://192.168.1.100:3306/datax_web?serverTimezone=Asia/Shanghai&useLegacyDatetimeCode=false&useSSL=false&nullNamePatternMatchesAll=true&useUnicode=true&characterEncoding=UTF-8
    # password: ${DB_PASSWORD:password}
    # username: ${DB_USERNAME:username}
    # url: jdbc:mysql://${DB_HOST:127.0.0.1}:${DB_PORT:3306}/${DB_DATABASE:dataxweb}?serverTimezone=Asia/Shanghai&useLegacyDatetimeCode=false&useSSL=false&nullNamePatternMatchesAll=true&useUnicode=true&characterEncoding=UTF-8
    driver-class-name: com.mysql.jdbc.Driver
    hikari:
      ## minimum number of idle connections
      minimum-idle: 5
      ## maximum time a connection may stay idle, default 600000 (10 minutes)
      idle-timeout: 180000
      ## maximum pool size, default 10
      maximum-pool-size: 10
      ## connection timeout, default 30 seconds (30000)
      connection-timeout: 30000
      connection-test-query: SELECT 1
      ## maximum lifetime of a pooled connection; 0 means unlimited; default 1800000 (30 minutes)
      max-lifetime: 1800000
  # datax-web email
  mail:
    host: smtp.qq.com
    port: 25
    username: gree2@qq.com
    password: xxxxxxxx
    # username: ${mail.username}
    # password: ${mail.password}
    properties:
      mail:
        smtp:
          auth: true
          starttls:
            enable: true
            required: true
          socketFactory:
            class: javax.net.ssl.SSLSocketFactory
management:
  health:
    mail:
      enabled: false
  server:
    servlet:
      context-path: /actuator
mybatis-plus:
  # location of the mapper.xml files
  mapper-locations: classpath*:/mybatis-mapper/*Mapper.xml
  # entity scanning; separate multiple packages with commas or semicolons
  #typeAliasesPackage: com.yibo.essyncclient.*.entity
  global-config:
    # database-related settings
    db-config:
      # primary key type: AUTO = database auto-increment, INPUT = user-supplied ID, ID_WORKER = globally unique numeric ID, UUID = globally unique UUID
      id-type: AUTO
      # field strategy: IGNORED = no check, NOT_NULL = non-NULL check, NOT_EMPTY = non-empty check
      field-strategy: NOT_NULL
      # camelCase / underscore conversion
      column-underline: true
      # logical deletion
      logic-delete-value: 0
      logic-not-delete-value: 1
      # database type
      db-type: mysql
    banner: false
  # native MyBatis settings
  configuration:
    map-underscore-to-camel-case: true
    cache-enabled: false
    call-setters-on-nulls: true
    jdbc-type-for-null: 'null'
  type-handlers-package: com.wugui.datax.admin.core.handler
# configure mybatis-plus SQL logging
logging:
  level:
    com.wugui.datax.admin.mapper: info
  path: ./data/applogs/admin
#  level:
#    com.wugui.datax.admin.mapper: error
#  path: ${data.path}/applogs/admin
#datax-job, access token
datax:
  job:
    accessToken:
    #i18n (default empty as chinese, "en" as english)
    i18n:
    ## triggerpool max size
    triggerpool:
      fast:
        max: 200
      slow:
        max: 100
    ### log retention days
    logretentiondays: 30
datasource:
  aes:
    key: AD42F6697B035B75
```

(3) Modify the datax-executor configuration

```yaml
# web port
server:
  # port: ${server.port}
  port: 8081
# log config
logging:
  config: classpath:logback.xml
  # path: ${data.path}/applogs/executor/jobhandler
  path: ./data/applogs/executor/jobhandler
datax:
  job:
    admin:
      ### datax admin address list, such as "http://address" or "http://address01,http://address02"
      addresses: http://127.0.0.1:8080
      # addresses: http://127.0.0.1:${datax.admin.port}
    executor:
      appname: datax-executor
      ip: 192.168.1.9
      port: 9999
      # port: ${executor.port:9999}
      ### job log path
      logpath: ./data/applogs/executor/jobhandler
      # logpath: ${data.path}/applogs/executor/jobhandler
      ### job log retention days
      logretentiondays: 30
    ### job, access token
    accessToken:
  executor:
    jsonpath: D:\\code\\datax\\job
    # jsonpath: ${json.path}
    pypath: D:\\code\\datax\\bin\\datax.py
    # pypath: ${python.path}
```

(4) Run

```text
...
18:51:27.066 admin [main] INFO o.s.b.w.e.t.TomcatWebServer - Tomcat started on port(s): 8080 (http) with context path ''
18:51:27.073 admin [main] INFO c.w.d.a.DataXAdminApplication - Started DataXAdminApplication in 26.306 seconds (JVM running for 29.794)
18:51:27.105 admin [main] INFO c.w.d.a.DataXAdminApplication - Access URLs:
----------------------------------------------------------
Local-API: http://127.0.0.1:8080/doc.html
External-API: http://172.26.240.1:8080/doc.html
web-URL: http://127.0.0.1:8080/index.html
----------------------------------------------------------
18:51:39.572 admin [http-nio-8080-exec-1] INFO o.a.c.c.C.[.[.[/] - Initializing Spring DispatcherServlet 'dispatcherServlet'
18:51:39.572 admin [http-nio-8080-exec-1] INFO o.s.w.s.DispatcherServlet - Initializing Servlet 'dispatcherServlet'
18:51:39.591 admin [http-nio-8080-exec-1] INFO o.s.w.s.DispatcherServlet - Completed initialization in 18 ms
...
```

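This completes the initial research. According to the official documentation, however, the task run earlier will not succeed.

The reasons are as follows:

1. The test machine's Python environment was installed with Anaconda 3 and is Python 3.8.8.

2. The DataX documentation says the default setup targets Python 2.7. To use Python 3, you have to copy in separate scripts.

Putting these together, I inferred that the execution would fail.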
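You can check this inference directly instead of waiting for a scheduled run. Compile the launcher that `pypath` points to (D:\code\datax\bin\datax.py) with the interpreter the executor will use. If the launcher contains Python 2-only syntax, compilation fails under Python 3.8. This is a minimal sketch; `compiles_under_current_python` is a hypothetical helper name, and the check does not catch incompatibilities that only surface at run time.

```python
from pathlib import Path


def compiles_under_current_python(script: Path) -> bool:
    """Return True if `script` parses under the running interpreter.

    Python 2-only syntax (for example `print "x"` or `except E, e:`) raises
    SyntaxError on Python 3. Run-time-only problems are not detected.
    """
    try:
        # compile() accepts bytes and honours a "# -*- coding: ... -*-" header.
        compile(script.read_bytes(), str(script), "exec")
    except SyntaxError:
        return False
    return True
```

Run it with the same python.exe the executor uses, e.g. the Anaconda 3.8.8 interpreter, and pass `Path(r"D:\code\datax\bin\datax.py")`.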

=================================================================

2021-12-11 22:37:17

Tonight I set up the environment again on my new laptop.

I ran the job; see the run log below.
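The run log below reports progress in `StandAloneJobContainerCommunicator` lines of the form `Total N records, M bytes | ... | Error E records, ... | Percentage P%`. As a minimal sketch, the last such line can be pulled out and compared with the 758,049 source rows and the job's `errorLimit.record` of 0, which as I understand it is the maximum number of dirty records DataX tolerates. The regular expression is my own and is based only on the lines in this log, not on a documented DataX format. The helper names are hypothetical.

```python
import re
from typing import NamedTuple


class JobSummary(NamedTuple):
    records: int
    byte_count: int
    error_records: int
    percentage: float


# Pattern written against the progress lines in this log only.
SUMMARY_RE = re.compile(
    r"Total (?P<records>\d+) records, (?P<bytes>\d+) bytes"
    r".*?Error (?P<errors>\d+) records"
    r".*?Percentage (?P<pct>[\d.]+)%"
)


def last_summary(log_text: str) -> JobSummary:
    """Return the last progress summary found in a DataX run log."""
    matches = list(SUMMARY_RE.finditer(log_text))
    if not matches:
        raise ValueError("no progress summary found")
    m = matches[-1]
    return JobSummary(int(m["records"]), int(m["bytes"]),
                      int(m["errors"]), float(m["pct"]))


def check_run(summary: JobSummary, expected_rows: int,
              error_record_limit: int = 0) -> bool:
    """True if the job reached 100%, moved every expected row, and stayed
    within errorLimit.record (0 in the job shown earlier)."""
    return (summary.percentage == 100.0
            and summary.records == expected_rows
            and summary.error_records <= error_record_limit)
```

For this run, the final summary line gives 758049 records and 0 error records. That matches the 758,049 rows of the source table, so `check_run(..., expected_rows=758049)` is True.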

Python 3 support

Copy the 3 .py files from datax-web/doc/datax-web/datax-python3

and use them to replace the 3 .py files in datax/bin.
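A minimal sketch of this replacement step. It copies every .py file from the datax-python3 folder over the file of the same name in datax/bin, and keeps a .bak copy of each original so the Python 2 launcher can be restored. The backup naming and the `replace_launcher_scripts` helper are my own additions for illustration.

```python
import shutil
from pathlib import Path
from typing import List


def replace_launcher_scripts(py3_dir: Path, datax_bin: Path) -> List[str]:
    """Copy every *.py in `py3_dir` into `datax_bin`, backing up any file
    it replaces as <name>.bak. Returns the names of the copied scripts."""
    replaced = []
    for src in sorted(py3_dir.glob("*.py")):
        dst = datax_bin / src.name
        if dst.exists():
            shutil.copy2(dst, dst.with_name(dst.name + ".bak"))  # keep the original
        shutil.copy2(src, dst)
        replaced.append(src.name)
    return replaced
```

For example: `replace_launcher_scripts(Path("datax-web/doc/datax-web/datax-python3"), Path("datax/bin"))`.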

```text
2021-12-11 22:28:27 [JobThread.run-130] <br>----------- datax-web job execute start -----------<br>----------- Param:
2021-12-11 22:28:27 [BuildCommand.buildDataXParam-100] ------------------Command parameters:
2021-12-11 22:28:27 [ExecutorJobHandler.execute-57] ------------------DataX process id: 18292
2021-12-11 22:28:27 [ProcessCallbackThread.callbackLog-186] <br>----------- datax-web job callback finish.
2021-12-11 22:28:27 [AnalysisStatistics.analysisStatisticsLog-53]
2021-12-11 22:28:27 [AnalysisStatistics.analysisStatisticsLog-53] DataX (DATAX-OPENSOURCE-3.0), From Alibaba !
2021-12-11 22:28:27 [AnalysisStatistics.analysisStatisticsLog-53] Copyright (C) 2010-2017, Alibaba Group. All Rights Reserved.
2021-12-11 22:28:27 [AnalysisStatistics.analysisStatisticsLog-53]
2021-12-11 22:28:27 [AnalysisStatistics.analysisStatisticsLog-53]
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] 2021-12-11 22:28:28.162 [main] INFO VMInfo - VMInfo# operatingSystem class => sun.management.OperatingSystemImpl
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] 2021-12-11 22:28:28.167 [main] INFO Engine - the machine info =>
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53]
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] osInfo: Oracle Corporation 1.8 25.202-b08
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] jvmInfo: Windows 10 amd64 10.0
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] cpu num: 16
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53]
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] totalPhysicalMemory: -0.00G
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] freePhysicalMemory: -0.00G
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] maxFileDescriptorCount: -1
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] currentOpenFileDescriptorCount: -1
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53]
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] GC Names [PS MarkSweep, PS Scavenge]
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53]
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] MEMORY_NAME | allocation_size | init_size
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] PS Eden Space | 256.00MB | 256.00MB
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] Code Cache | 240.00MB | 2.44MB
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] Compressed Class Space | 1,024.00MB | 0.00MB
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] PS Survivor Space | 42.50MB | 42.50MB
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] PS Old Gen | 683.00MB | 683.00MB
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] Metaspace | -0.00MB | 0.00MB
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53]
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53]
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] 2021-12-11 22:28:28.187 [main] INFO Engine -
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] {
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] "content":[
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] {
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] "reader":{
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] "name":"mysqlreader",
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] "parameter":{
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] "column":[
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] "`id`",
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] "`level`",
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] "`parent_code`",
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] "`area_code`",
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] "`zip_code`",
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] "`city_code`",
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] "`name`",
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] "`short_name`",
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] "`merger_name`",
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] "`pinyin`",
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] "`lng`",
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] "`lat`"
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] ],
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] "connection":[
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] {
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] "jdbcUrl":[
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] "jdbc:mysql://home:3307/cnarea20200630"
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] ],
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] "table":[
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] "cnarea_2020"
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] ]
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] }
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] ],
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] "password":"******",
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] "splitPk":"id",
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] "username":"root"
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] }
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] },
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] "writer":{
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] "name":"postgresqlwriter",
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] "parameter":{
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] "column":[
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] "\"id\"",
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] "\"level\"",
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] "\"parent_code\"",
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] "\"area_code\"",
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] "\"zip_code\"",
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] "\"city_code\"",
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] "\"name\"",
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] "\"short_name\"",
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] "\"merger_name\"",
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] "\"pinyin\"",
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] "\"lng\"",
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] "\"lat\""
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] ],
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] "connection":[
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] {
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] "jdbcUrl":"jdbc:postgresql://home:5432/datax",
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] "table":[
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] "public.cnarea_2020"
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] ]
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] }
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] ],
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] "password":"******",
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] "preSql":[
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] "delete from cnarea_2020"
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] ],
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] "username":"postgres"
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] }
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] }
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] }
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] ],
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] "setting":{
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] "errorLimit":{
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] "percentage":0.02,
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] "record":0
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] },
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] "speed":{
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] "byte":1048576,
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] "channel":3
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] }
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] }
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] }
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53]
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] 2021-12-11 22:28:28.203 [main] WARN Engine - prioriy set to 0, because NumberFormatException, the value is: null
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] 2021-12-11 22:28:28.204 [main] INFO PerfTrace - PerfTrace traceId=job_-1, isEnable=false, priority=0
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] 2021-12-11 22:28:28.205 [main] INFO JobContainer - DataX jobContainer starts job.
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] 2021-12-11 22:28:28.206 [main] INFO JobContainer - Set jobId = 0
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] 2021-12-11 22:28:28.480 [job-0] INFO OriginalConfPretreatmentUtil - Available jdbcUrl:jdbc:mysql://home:3307/cnarea20200630?yearIsDateType=false&zeroDateTimeBehavior=convertToNull&tinyInt1isBit=false&rewriteBatchedStatements=true.
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] 2021-12-11 22:28:28.495 [job-0] INFO OriginalConfPretreatmentUtil - table:[cnarea_2020] has columns:[id,level,parent_code,area_code,zip_code,city_code,name,short_name,merger_name,pinyin,lng,lat].
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] 2021-12-11 22:28:28.581 [job-0] INFO OriginalConfPretreatmentUtil - table:[public.cnarea_2020] all columns:[
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] id,level,parent_code,area_code,zip_code,city_code,name,short_name,merger_name,pinyin,lng,lat
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] ].
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] 2021-12-11 22:28:28.607 [job-0] INFO OriginalConfPretreatmentUtil - Write data [
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] INSERT INTO %s ("id","level","parent_code","area_code","zip_code","city_code","name","short_name","merger_name","pinyin","lng","lat") VALUES(?,?,?,?,?,?,?,?,?,?,?,?)
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] ], which jdbcUrl like:[jdbc:postgresql://home:5432/datax]
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] 2021-12-11 22:28:28.607 [job-0] INFO JobContainer - jobContainer starts to do prepare ...
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] 2021-12-11 22:28:28.607 [job-0] INFO JobContainer - DataX Reader.Job [mysqlreader] do prepare work .
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] 2021-12-11 22:28:28.608 [job-0] INFO JobContainer - DataX Writer.Job [postgresqlwriter] do prepare work .
2021-12-11 22:28:28 [AnalysisStatistics.analysisStatisticsLog-53] 2021-12-11 22:28:28.619 [job-0] INFO CommonRdbmsWriter$Job - Begin to execute preSqls:[delete from cnarea_2020]. context info:jdbc:postgresql://home:5432/datax.
2021-12-11 22:28:31 [AnalysisStatistics.analysisStatisticsLog-53] 2021-12-11 22:28:31.778 [job-0] INFO JobContainer - jobContainer starts to do split ...
2021-12-11 22:28:31 [AnalysisStatistics.analysisStatisticsLog-53] 2021-12-11 22:28:31.779 [job-0] INFO JobContainer - Job set Max-Byte-Speed to 1048576 bytes.
2021-12-11 22:28:31 [AnalysisStatistics.analysisStatisticsLog-53] 2021-12-11 22:28:31.787 [job-0] INFO JobContainer - DataX Reader.Job [mysqlreader] splits to [1] tasks.
2021-12-11 22:28:31 [AnalysisStatistics.analysisStatisticsLog-53] 2021-12-11 22:28:31.788 [job-0] INFO JobContainer - DataX Writer.Job [postgresqlwriter] splits to [1] tasks.
2021-12-11 22:28:31 [AnalysisStatistics.analysisStatisticsLog-53] 2021-12-11 22:28:31.810 [job-0] INFO JobContainer - jobContainer starts to do schedule ...
2021-12-11 22:28:31 [AnalysisStatistics.analysisStatisticsLog-53] 2021-12-11 22:28:31.815 [job-0] INFO JobContainer - Scheduler starts [1] taskGroups.
2021-12-11 22:28:31 [AnalysisStatistics.analysisStatisticsLog-53] 2021-12-11 22:28:31.817 [job-0] INFO JobContainer - Running by standalone Mode.
2021-12-11 22:28:31 [AnalysisStatistics.analysisStatisticsLog-53] 2021-12-11 22:28:31.830 [taskGroup-0] INFO TaskGroupContainer - taskGroupId=[0] start [1] channels for [1] tasks.
2021-12-11 22:28:31 [AnalysisStatistics.analysisStatisticsLog-53] 2021-12-11 22:28:31.834 [taskGroup-0] INFO Channel - Channel set byte_speed_limit to -1, No bps activated.
2021-12-11 22:28:31 [AnalysisStatistics.analysisStatisticsLog-53] 2021-12-11 22:28:31.835 [taskGroup-0] INFO Channel - Channel set record_speed_limit to -1, No tps activated.
2021-12-11 22:28:31 [AnalysisStatistics.analysisStatisticsLog-53] 2021-12-11 22:28:31.847 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] taskId[0] attemptCount[1] is started
2021-12-11 22:28:31 [AnalysisStatistics.analysisStatisticsLog-53] 2021-12-11 22:28:31.851 [0-0-0-reader] INFO CommonRdbmsReader$Task - Begin to read record by Sql: [select `id`,`level`,`parent_code`,`area_code`,`zip_code`,`city_code`,`name`,`short_name`,`merger_name`,`pinyin`,`lng`,`lat` from cnarea_2020
2021-12-11 22:28:31 [AnalysisStatistics.analysisStatisticsLog-53] ] jdbcUrl:[jdbc:mysql://home:3307/cnarea20200630?yearIsDateType=false&zeroDateTimeBehavior=convertToNull&tinyInt1isBit=false&rewriteBatchedStatements=true].
2021-12-11 22:28:41 [AnalysisStatistics.analysisStatisticsLog-53] 2021-12-11 22:28:41.851 [job-0] INFO StandAloneJobContainerCommunicator - Total 0 records, 0 bytes | Speed 0B/s, 0 records/s | Error 0 records, 0 bytes | All Task WaitWriterTime 0.000s | All Task WaitReaderTime 0.000s | Percentage 0.00%
2021-12-11 22:28:51 [AnalysisStatistics.analysisStatisticsLog-53] 2021-12-11 22:28:51.852 [job-0] INFO StandAloneJobContainerCommunicator - Total 96768 records, 8931326 bytes | Speed 872.20KB/s, 9676 records/s | Error 0 records, 0 bytes | All Task WaitWriterTime 9.596s | All Task WaitReaderTime 0.239s | Percentage 0.00%
2021-12-11 22:29:01 [AnalysisStatistics.analysisStatisticsLog-53] 2021-12-11 22:29:01.868 [job-0] INFO StandAloneJobContainerCommunicator - Total 197120 records, 18204903 bytes | Speed 905.62KB/s, 10035 records/s | Error 0 records, 0 bytes | All Task WaitWriterTime 19.407s | All Task WaitReaderTime 0.397s | Percentage 0.00%
2021-12-11 22:29:11 [AnalysisStatistics.analysisStatisticsLog-53] 2021-12-11 22:29:11.877 [job-0] INFO StandAloneJobContainerCommunicator - Total 295424 records, 27029877 bytes | Speed 861.81KB/s, 9830 records/s | Error 0 records, 0 bytes | All Task WaitWriterTime 29.157s | All Task WaitReaderTime 0.547s | Percentage 0.00%
2021-12-11 22:29:21 [AnalysisStatistics.analysisStatisticsLog-53] 2021-12-11 22:29:21.890 [job-0] INFO StandAloneJobContainerCommunicator - Total 389632 records, 35786259 bytes | Speed 855.12KB/s, 9420 records/s | Error 0 records, 0 bytes | All Task WaitWriterTime 39.115s | All Task WaitReaderTime 0.691s | Percentage 0.00%
2021-12-11 22:29:31 [AnalysisStatistics.analysisStatisticsLog-53] 2021-12-11 22:29:31.893 [job-0] INFO StandAloneJobContainerCommunicator - Total 485888 records, 44464293 bytes | Speed 847.46KB/s, 9625 records/s | Error 0 records, 0 bytes | All Task WaitWriterTime 49.023s | All Task WaitReaderTime 0.836s | Percentage 0.00%
2021-12-11 22:29:41 [AnalysisStatistics.analysisStatisticsLog-53] 2021-12-11 22:29:41.895 [job-0] INFO StandAloneJobContainerCommunicator - Total 576000 records, 52520355 bytes | Speed 786.72KB/s, 9011 records/s | Error 0 records, 0 bytes | All Task WaitWriterTime 58.670s | All Task WaitReaderTime 0.985s | Percentage 0.00%
2021-12-11 22:29:51 [AnalysisStatistics.analysisStatisticsLog-53] 2021-12-11 22:29:51.902 [job-0] INFO StandAloneJobContainerCommunicator - Total 664064 records, 60429822 bytes | Speed 772.41KB/s, 8806 records/s | Error 0 records, 0 bytes | All Task WaitWriterTime 68.620s | All Task WaitReaderTime 1.128s | Percentage 0.00%
2021-12-11 22:29:51 [AnalysisStatistics.analysisStatisticsLog-53] 2021-12-11 22:29:51.996 [0-0-0-reader] INFO CommonRdbmsReader$Task - Finished read record by Sql: [select `id`,`level`,`parent_code`,`area_code`,`zip_code`,`city_code`,`name`,`short_name`,`merger_name`,`pinyin`,`lng`,`lat` from cnarea_2020
2021-12-11 22:29:51 [AnalysisStatistics.analysisStatisticsLog-53] ] jdbcUrl:[jdbc:mysql://home:3307/cnarea20200630?yearIsDateType=false&zeroDateTimeBehavior=convertToNull&tinyInt1isBit=false&rewriteBatchedStatements=true].
2021-12-11 22:29:52 [AnalysisStatistics.analysisStatisticsLog-53] 2021-12-11 22:29:52.353 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] taskId[0] is successed, used[80507]ms
2021-12-11 22:29:52 [AnalysisStatistics.analysisStatisticsLog-53] 2021-12-11 22:29:52.354 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] completed it's tasks.
2021-12-11 22:30:01 [AnalysisStatistics.analysisStatisticsLog-53] 2021-12-11 22:30:01.909 [job-0] INFO StandAloneJobContainerCommunicator - Total 758049 records, 70508004 bytes | Speed 984.20KB/s, 9398 records/s | Error 0 records, 0 bytes | All Task WaitWriterTime 78.400s | All Task WaitReaderTime 1.289s | Percentage 100.00%
2021-12-11 22:30:01 [AnalysisStatistics.analysisStatisticsLog-53] 2021-12-11 22:30:01.910 [job-0] INFO AbstractScheduler - Scheduler accomplished all tasks.
2021-12-11 22:30:01 [AnalysisStatistics.analysisStatisticsLog-53] 2021-12-11 22:30:01.910 [job-0] INFO JobContainer - DataX Writer.Job [postgresqlwriter] do post work.
2021-12-11 22:30:01 [AnalysisStatistics.analysisStatisticsLog-53] 2021-12-11 22:30:01.910 [job-0] INFO JobContainer - DataX Reader.Job [mysqlreader] do post work.
2021-12-11 22:30:01 [AnalysisStatistics.analysisStatisticsLog-53] 2021-12-11 22:30:01.910 [job-0] INFO JobContainer - DataX jobId [0] completed successfully.
2021-12-11 22:30:01 [AnalysisStatistics.analysisStatisticsLog-53] 2021-12-11 22:30:01.911 [job-0] INFO HookInvoker - No hook invoked, because base dir not exists or is a file: D:\code\datax\datax\hook
2021-12-11 22:30:01 [AnalysisStatistics.analysisStatisticsLog-53] 2021-12-11 22:30:01.912 [job-0] INFO JobContainer -
2021-12-11 22:30:01 [AnalysisStatistics.analysisStatisticsLog-53] [total cpu info] =>
2021-12-11 22:30:01 [AnalysisStatistics.analysisStatisticsLog-53] averageCpu | maxDeltaCpu | minDeltaCpu
2021-12-11 22:30:01 [AnalysisStatistics.analysisStatisticsLog-53] -1.00% | -1.00% | -1.00%
2021-12-11 22:30:01 [AnalysisStatistics.analysisStatisticsLog-53]
2021-12-11 22:30:01 [AnalysisStatistics.analysisStatisticsLog-53]
2021-12-11 22:30:01 [AnalysisStatistics.analysisStatisticsLog-53] [total gc info] =>
2021-12-11 22:30:01 [AnalysisStatistics.analysisStatisticsLog-53] NAME | totalGCCount | maxDeltaGCCount | minDeltaGCCount | totalGCTime | maxDeltaGCTime | minDeltaGCTime
2021-12-11 22:30:01 [AnalysisStatistics.analysisStatisticsLog-53] PS MarkSweep | 0 | 0 | 0 | 0.000s | 0.000s | 0.000s
2021-12-11 22:30:01 [AnalysisStatistics.analysisStatisticsLog-53] PS Scavenge | 19 | 19 | 19 | 0.088s | 0.088s | 0.088s
2021-12-11 22:30:01 [AnalysisStatistics.analysisStatisticsLog-53]
2021-12-11 22:30:01 [AnalysisStatistics.analysisStatisticsLog-53] 2021-12-11 22:30:01.912 [job-0] INFO JobContainer - PerfTrace not enable!
2021-12-11 22:30:01 [AnalysisStatistics.analysisStatisticsLog-53] 2021-12-11 22:30:01.912 [job-0] INFO StandAloneJobContainerCommunicator - Total 758049 records, 70508004 bytes | Speed 765.06KB/s, 8422 records/s | Error 0 records, 0 bytes | All Task WaitWriterTime 78.400s | All Task WaitReaderTime 1.289s | Percentage 100.00%
2021-12-11 22:30:01 [AnalysisStatistics.analysisStatisticsLog-53] 2021-12-11 22:30:01.914 [job-0] INFO JobContainer -
2021-12-11 22:30:01 [AnalysisStatistics.analysisStatisticsLog-53] 任务启动时刻 : 2021-12-11 22:28:28
2021-12-11 22:30:01 [AnalysisStatistics.analysisStatisticsLog-53] 任务结束时刻 : 2021-12-11 22:30:01
2021-12-11 22:30:01 [AnalysisStatistics.analysisStatisticsLog-53] 任务总计耗时 : 93s
2021-12-11 22:30:01 [AnalysisStatistics.analysisStatisticsLog-53] 任务平均流量 : 765.06KB/s
2021-12-11 22:30:01 [AnalysisStatistics.analysisStatisticsLog-53] 记录写入速度 : 8422rec/s
2021-12-11 22:30:01 [AnalysisStatistics.analysisStatisticsLog-53] 读出记录总数 : 758049
2021-12-11 22:30:01 [AnalysisStatistics.analysisStatisticsLog-53] 读写失败总数 : 0
2021-12-11 22:30:01 [AnalysisStatistics.analysisStatisticsLog-53]
2021-12-11 22:30:01 [JobThread.run-165] <br>----------- datax-web job execute end(finish) -----------<br>----------- ReturnT:ReturnT [code=200, msg=LogStatistics{taskStartTime=2021-12-11 22:28:28, taskEndTime=2021-12-11 22:30:01, taskTotalTime=93s, taskAverageFlow=765.06KB/s, taskRecordWritingSpeed=8422rec/s, taskRecordReaderNum=758049, taskRecordWriteFailNum=0}, content=null]
2021-12-11 22:30:02 [TriggerCallbackThread.callbackLog-186] <br>----------- datax-web job callback finish.
```

7. Packaging

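Later, I'll gather the various packages used in the deployment above, bundle them, and upload them to Baidu Netdisk.

I'll also add the Python 3-related configuration.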

=================================================================

2021-12-11 22:45:22

Link: https://pan.baidu.com/s/1iyIo9BoASDA6NIcVV6Y65Q

Extraction code: sclo